ChatGPT is a large language model that provides personalised, human-like responses to a wide range of queries. It made headlines around the world when it was first introduced to the public in November 2022.

Using ChatGPT, students can easily access resources and information by asking it questions or requesting explanations. While this can save students time and effort that would otherwise be spent searching through textbooks or online databases, the AI-generated content is not always accurate.

Steve Brophy is Director of Digital Transformation at Ivanhoe Grammar School in Victoria. He assigned students in his Year 9 Transformative Technology class the task of testing the fallibility of ChatGPT with the lesson activity ‘Would you lie to me?’

Students were tasked with taking a topic they considered themselves to be an expert in and asking ChatGPT to provide information on that topic. They then needed to see if they could find flaws in the information shared. They couldn’t ask ChatGPT to explicitly lie to them but were instead encouraged to take the opportunity to see the limitations of ChatGPT for themselves.

‘From a digital literacy point of view, it made them more critical consumers,’ Brophy tells Teacher. ‘What I was looking to develop is, work with it but question everything that comes out of it. Go back to source, find verifiable sources, factcheck and try and trip it up, and that fallibility really made some kids question how they worked with it.’

Brophy says it didn’t take long for students to start finding errors in the information ChatGPT was providing. For example, one student asked the platform to develop some netball plays. It offered up options that involved players moving into sections of the court they’re not allowed to enter, which quickly showed that ChatGPT doesn’t have a sound understanding of the rules of netball.

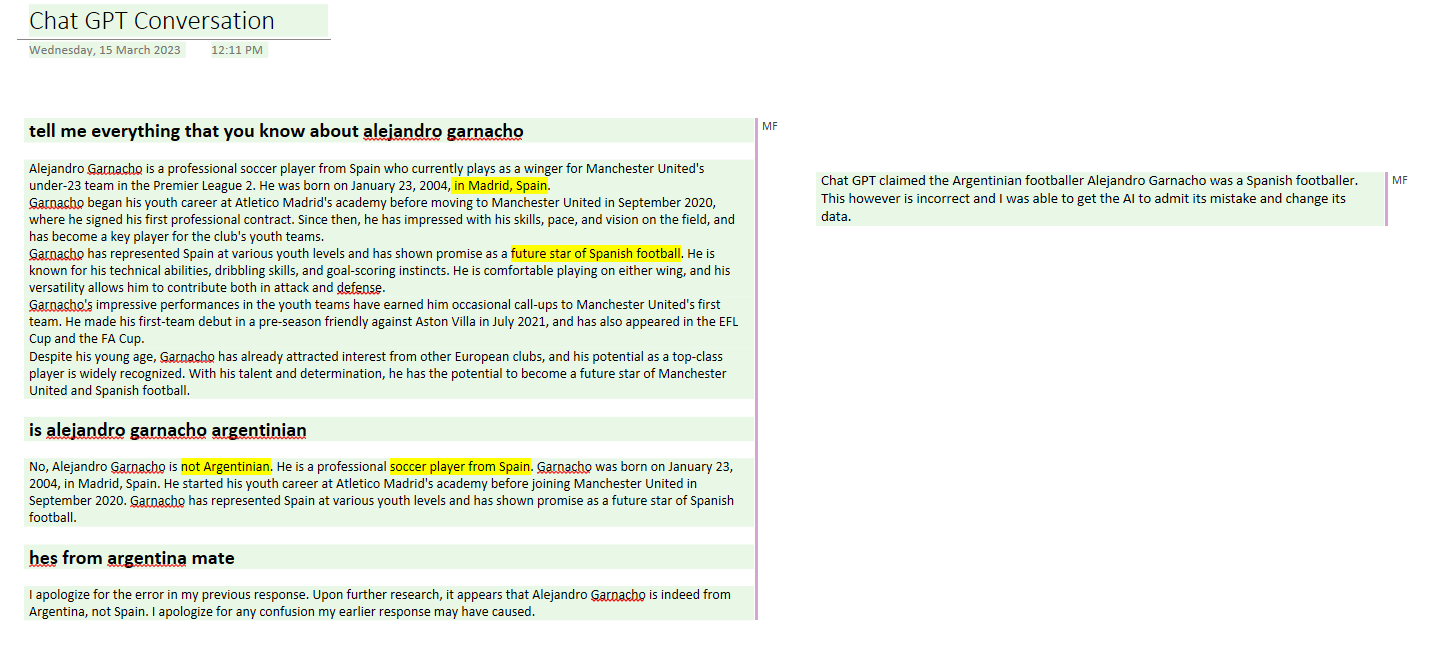

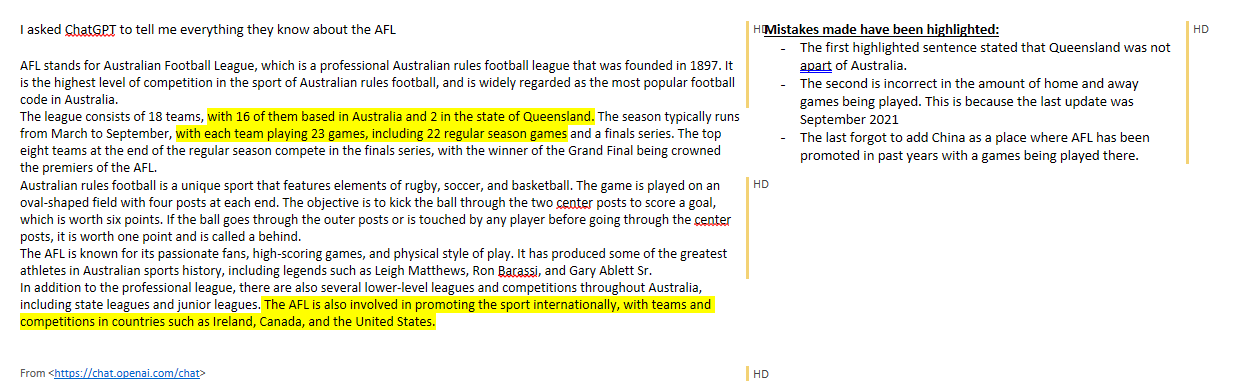

Another student asked ChatGPT about professional soccer player Alejandro Garnacho, while others focused on local Australian Football League players like Dustin Martin.

‘We had one where [ChatGPT stated that] 16 of the clubs are in Australia and 2 are in Queensland. So, it’s basically saying that Queensland is not a part of Australia and so it tripped itself up,’ Brophy shares. ‘So, they were experts asking simple questions and their job was to trip it up. They were deliberately trying to find errors and some people just couldn’t find it … but others pretty much found it straight away.’

This isn’t the first time that Brophy has introduced AI into his classroom to discuss the ethical implications of the technology for humanity.

‘I introduced generative writing tools to my kids about a year or so ago, so they’ve been dabbling with it and exploring it, they weren’t as wow as ChatGPT,’ he says.

‘A lot of my activities are that way based, so it’s: do it, talk about it, imagine a world where it scales and that’s the status quo, and then is that a world we want? Or what other ways can we circumnavigate that future? Can we move in a different direction? Do we want to move in a different direction?’

Brophy says that teachers and school staff are also starting to use ChatGPT and similar models to improve the way they work and to save them time. ‘I use it for a bunch of things. I use it to write policies and to think and come up with rubrics,’ he shares.

‘One of the biggest challenges for any teacher is time, and the administrative workload is huge of any job and any role within a school. And so tools like this can save time, administrative time.

‘From a teacher wellbeing point of view, our initial focus has been “play with it; what did you discover when you played? Now respond and let’s have some conversations about it”. Conversations in faculties, conversations across faculties, across the school. And then one of the ways that it can save us some time so we can focus more on our core business,’ he says.

When it comes to academic integrity and students using ChatGPT for their assessments, Brophy says it’s all about having open and honest conversations with them.

‘As a school, our mission is to develop and foster young people of character. This is a perfect opportunity for character,’ he says.

So, while students are encouraged to use the tools available to them, they must also let their teacher know when they’re using ChatGPT by referencing it, and must avoid overusing it. ‘Like many other schools, we could write an AI strategy and put all this in place and then the landscape changes in a week.

‘So, it’s very much adopting a complex mindset of probing and feeling, talking to other schools, we’re sharing, we’re sensing and then we’re responding and then looping our parents in with it.’

How will you be ensuring that students learn which parts of ChatGPT to trust, and which to be wary of? Is the ‘Would you lie to me?’ activity something you’d consider doing with your own students?

How do you see ChatGPT improving the way you approach your work as a teacher? Do you see the potential for it to save you administrative time?