When it comes to designing student assessments, teachers can often be swayed by their instincts as subject experts rather than by the actual requirements of the assessment. In this article, ACER India Research Fellow Bikramjit Sen discusses three common mistakes that teachers may make while creating a science assessment.

Teaching science offers a plethora of creative opportunities, from whiteboard teaching to exciting laboratory experiments to assessing children in innovative ways. That said, a science teacher faces the challenge of accomplishing all of this within the set timeframe of an academic year.

Here are three things you should keep in mind while developing classroom assessments.

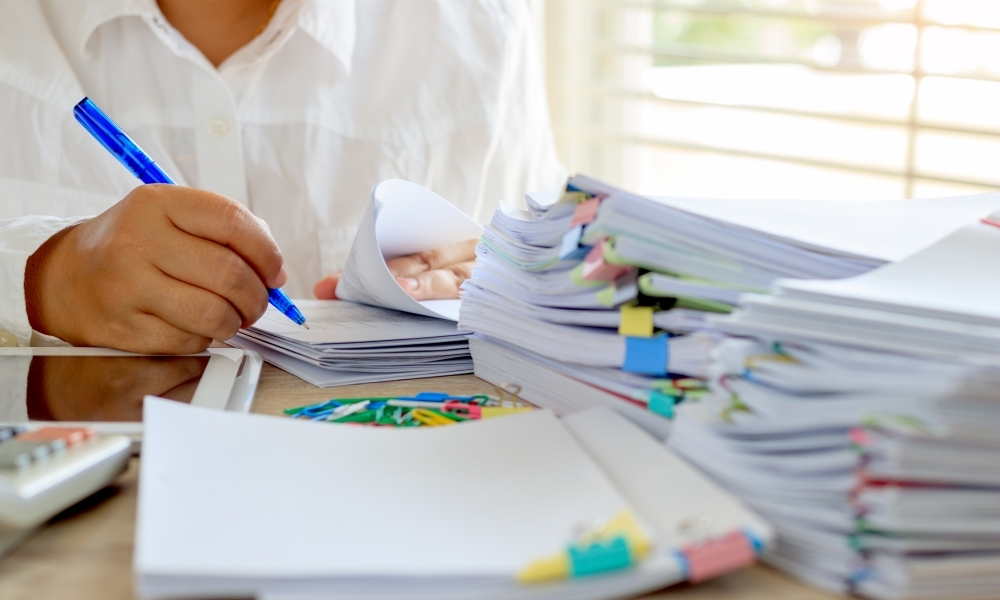

Use plausible distracters in multiple choice items

Multiple Choice Questions (MCQs) are one of the most widely used and preferred test item formats for science assessments, especially in classroom assessments.

While creating MCQs, teachers often overlook the plausibility of the distracters (the incorrect options). An item loses its purpose if the distracters are contrived or if students can reject them at a glance, because students can then reach the answer by elimination rather than by understanding the concept.

Let us now look at a sample Grade 5 item where the distracters are implausible. Options A, B, and C have no relation to leaking pipes, so option D clearly stands out from the rest. This is a mistake that teachers should try to avoid.

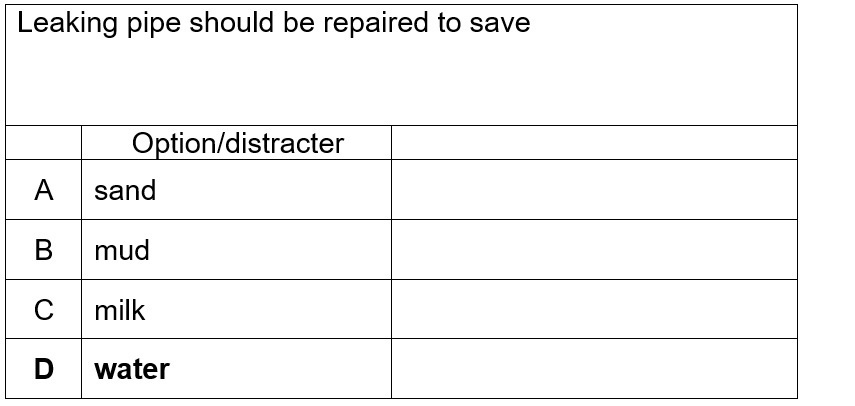

Avoid forced inclusion of images

Science teachers may find it hard to resist using an attractive, interesting graphic to display data in a science item. In doing so, they can lose sight of whether the picture is actually relevant to the item.

Graphics are resource-intensive elements and take up valuable space in an assessment paper. In addition, a non-essential element in an assessment takes up students' limited time without benefitting them. Therefore, teachers should be deliberate about the use of graphics in science items: use them only when necessary, not for cosmetic purposes.

Let us now look at a sample Grade 3 item where the image has no practical relevance. In this item, the options alone are enough for a Grade 3 student to identify the correct answer, so the graphic is unnecessary.

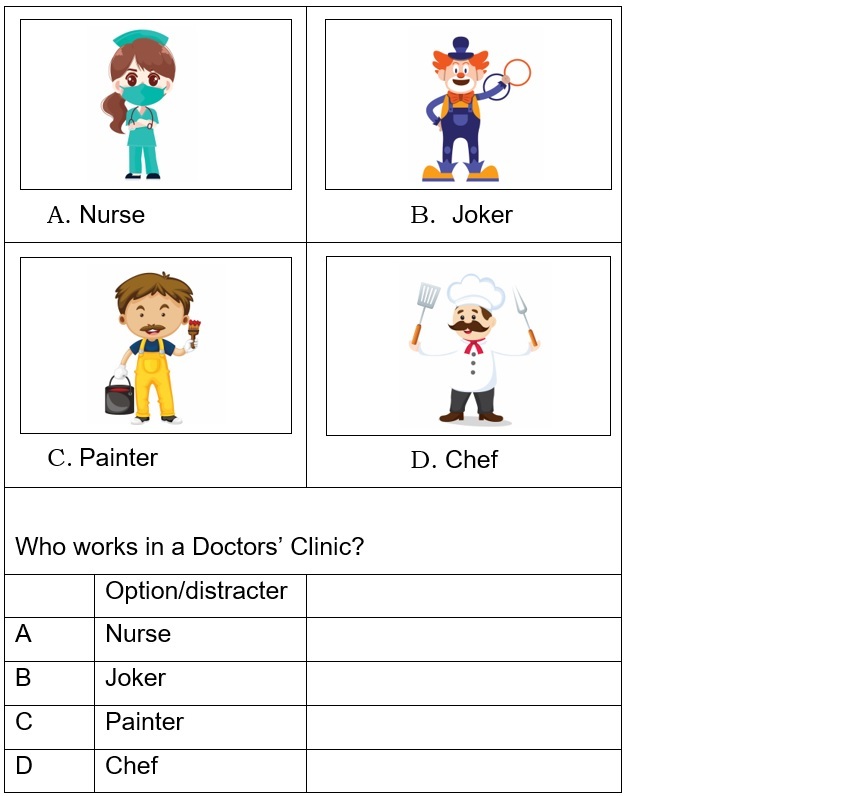

Be specific in an open response item task

Testing students' creative thinking skills is an essential component of any good-quality science assessment. Open response items are the most suitable tools for this purpose.

Many teachers consider open response items the ‘go-to’ tools for testing higher-level cognitive skills in science. However, they often overlook the design requirements of such items.

An open response item can invite a full spectrum of possible answers from students that the teacher may not have thought of while writing the item. Unlike MCQs, these items do not restrict students' thinking to a limited set of options. Students are free to express their own reasoning, which may vary from one individual to another.

For such questions, awarding fair marks or scores to different responses can be challenging for the marker. To address this, a teacher should frame the question so that it asks for a specific task with only one acceptable response. The rubric or scoring guide for such an item should then specify that one response.

For the Grade 6 item below, the question does not have a specific response. Students could give several different answers, including ‘the slope was not inclined enough’, ‘the ramp surface was rough’, ‘the toy car wheels were rough’, and so on. In such a situation, markers end up relying on their own understanding, rather than set criteria, to score the response.

In addition to these three common mistakes, there are other important aspects of designing a science assessment.

Teachers can learn more about assessment item development from ACER’s forthcoming professional learning festival, Jigyasa. The festival offers a range of short, bite-size courses on smart assessments, 21st-century skills, EdTech, and much more, all aligned with the National Education Policy 2020. To find out more about the festival and courses, and to register, visit: https://www.acer.org/in/jigyasa-learning-festival

Thinking about your own experience as a teacher or leader:

How do you learn about the quality standards of developing assessments?

Do you and your colleagues work in groups to develop assessments?

What steps do you follow to ensure that your assessment tool captures learning data accurately?