Scientific progress does not follow a linear path; although it moves forward, it is full of bumps and turns. The same is true of how evidence advances within the educational ecosystem.

I worked as a scientist in bioinformatics for over nine years. There were times when my experiments didn't work, and as I held up a blank piece of film in horror, all I could see were the many days of preparation wasted. I remember a fellow scientist telling me that experiments had an 80 per cent failure rate; my own experience bore this out.

To help us understand experimental failure, we would include a control group, so we could tell what had failed or, in those rare moments of success, what had worked. When an experiment succeeded, we needed to repeat it twice more and reproduce the same result before we could publish.

Evidence in education is continually growing, often not in a consistent way but, as in science, through new findings that are sometimes contradictory. This is why I am so passionate about educational research. I love the way the numbers change as new studies are incorporated into meta-analyses (Education Endowment Foundation, 2018b; Hattie, 2009; Marzano, Waters & McNulty, 2005).

Evidence strengthens the case for quality programs and helps put to rest those with unproven results. This shows that education is a dynamic field, like the experiments in my laboratory. Like science, it is driven by a spirit of curiosity (Deeble & Vaughan, 2018): by wanting to know what works, for whom, and in what circumstances.

The dynamic nature of educational evidence

One example of growth in the evidence ecosystem I work in is the soon-to-be-updated Teaching & Learning Toolkit. Quite a few of its numbers are changing, in exciting ways, as new studies are incorporated. This happens every 12 months through an international literature review, and it sometimes overturns previously held beliefs about educational evidence. Because the figures move as new results come in, the Toolkit captures the live and dynamic nature of evidence in education: the impact of an approach, expressed in months' worth of learning progress, can change from one update to the next.

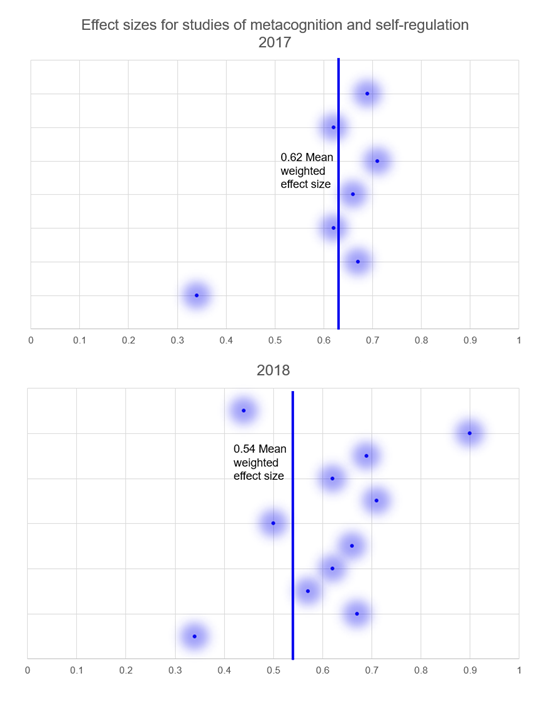

The latest update to the Toolkit will bring changes to three approaches: metacognition and self-regulation, reading comprehension, and mentoring. Metacognition and self-regulation will move from eight to seven months of learning progress (see Figure 1), while reading comprehension will gain an additional month, moving from five to six months. Why have these changes occurred? Additional studies alter the overall effect size for each approach, and that effect size is then translated into months' worth of learning progress.

Figure 1: Effect sizes for metacognition and self-regulation in 2017 and 2018.
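To illustrate how adding studies can shift an overall effect size, here is a minimal Python sketch of a fixed-effect, inverse-variance meta-analysis. This is an assumption for illustration: the Toolkit's actual pooling method may differ (for example, a random-effects model), and the study numbers below are hypothetical, not the Toolkit's data. The function name `pooled_effect` is likewise hypothetical.

```python
import math

def pooled_effect(studies):
    """Fixed-effect inverse-variance pooled effect size.

    Each study is an (effect_size, standard_error) pair. Studies with smaller
    standard errors (typically larger samples) receive more weight.
    """
    weights = [1.0 / se ** 2 for _, se in studies]
    total_weight = sum(weights)
    estimate = sum(w * d for w, (d, _) in zip(weights, studies)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return estimate, pooled_se

# Hypothetical studies: (effect size, standard error). Illustration only.
existing = [(0.70, 0.10), (0.55, 0.08), (0.65, 0.12)]
new_studies = [(0.40, 0.06)]

before, _ = pooled_effect(existing)
after, _ = pooled_effect(existing + new_studies)
print(f"before: {before:.2f}, after: {after:.2f}")
```

With these made-up numbers, one large, precise study with a smaller effect pulls the pooled estimate down from about 0.62 to about 0.52. Converting a pooled effect size into months of learning progress uses a separate lookup table, which is not reproduced here.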

Capturing good evidence

The studies that best help grow the evidence ecosystem are those with appropriate designs, such as randomised controlled trials (RCTs) (Gorard, See & Siddiqui, 2017; Hutchison & Styles, 2010; Vaughan & Roberts-Hull, 2018). RCTs parallel the use of control groups in scientific studies. They are expensive and time-consuming and require specific expertise, but they are critical to capturing good evidence. Crucial to these studies are the teams of qualitative researchers who work alongside the quantitative researchers to determine how the changed learning conditions have affected students' learning. For example, an RCT in the UK involving 25 000 students looked at the impact of Embedding Formative Assessment. It showed the importance of providing feedback that moves learning forward: students made two additional months' worth of learning progress (Education Endowment Foundation, 2018a).
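The core logic of an RCT, randomly assigning participants to treatment and control groups and then comparing outcomes as a standardised effect size, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: it randomises individual pupils and uses Cohen's d with a pooled standard deviation, whereas real trials may randomise whole schools and use more sophisticated analyses. The function names, pupil labels and scores are all hypothetical.

```python
import random
import statistics

def randomise(pupils, seed=None):
    """Randomly split pupils into treatment and control groups of (near) equal size."""
    rng = random.Random(seed)
    shuffled = list(pupils)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

def cohens_d(treatment_scores, control_scores):
    """Standardised mean difference using the pooled sample standard deviation."""
    n_t, n_c = len(treatment_scores), len(control_scores)
    var_t = statistics.variance(treatment_scores)
    var_c = statistics.variance(control_scores)
    pooled_sd = (((n_t - 1) * var_t + (n_c - 1) * var_c) / (n_t + n_c - 2)) ** 0.5
    diff = statistics.mean(treatment_scores) - statistics.mean(control_scores)
    return diff / pooled_sd

# Hypothetical pupils, assigned at random so the groups differ only by chance.
pupils = [f"pupil_{i}" for i in range(10)]
treatment_group, control_group = randomise(pupils, seed=1)

# Hypothetical post-test scores for each group after the trial.
treatment_scores = [62, 70, 68, 75, 71]
control_scores = [60, 65, 63, 70, 64]
print(f"effect size: {cohens_d(treatment_scores, control_scores):.2f}")
```

Because assignment is random, any systematic difference between the groups' scores can more confidently be attributed to the intervention rather than to pre-existing differences, which is the same role the control group played in the laboratory.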

Evidence for Learning is currently commissioning three of these studies. One is the independent evaluation of Thinking Maths, led by a team of researchers including Dr Hilary Hollingsworth and Dr Katherine Dix from the Australian Council for Educational Research. The results of this evaluation have been released today.

Growth within the evidence ecosystem is fed by rigorous studies. Control groups in educational research help us be confident in the accuracy of the results. Curiosity also drives growth within the ecosystem: a constant pursuit of what works best for our students, and in what circumstances.

References

Deeble, M., & Vaughan, T. (2018). An evidence broker for Australian schools. Centre for Strategic Education, Occasional Paper, 155, 1-20. Retrieved from http://www.evidenceforlearning.org.au/evidence-informed-educators/an-evidence-broker-for-australian-schools/

Education Endowment Foundation. (2018a). Embedding Formative Assessment. Retrieved from https://educationendowmentfoundation.org.uk/projects-and-evaluation/projects/embedding-formative-assessment/

Education Endowment Foundation. (2018b). Evidence for Learning Teaching & Learning Toolkit: Education Endowment Foundation. Retrieved from http://evidenceforlearning.org.au/the-toolkit/full-toolkit/

Gorard, S., See, B. H., & Siddiqui, N. (2017). Assessing the trustworthiness of a research finding. In The trials of evidence-based education: The promises, opportunities and problems of trials in education. London and New York: Routledge.

Hattie, J. (2009). Visible Learning: A synthesis of over 800 meta-analyses relating to achievement. London: Routledge.

Hutchison, D., & Styles, B. (2010). A guide to running randomised controlled trials for educational researchers. Slough: NFER.

Marzano, R. J., Waters, T., & McNulty, B. A. (2005). School leadership that works: From research to results. Alexandria, VA: ASCD.

Vaughan, T., & Roberts-Hull, K. (2018). Why randomisation is not detrimental. Retrieved from http://evidenceforlearning.org.au/news/why-randomisation-is-not-detrimental/

How do you keep up with the latest educational research?

As a school leader, how does educational research inform your decision making?