Artificial Intelligence is now a hot topic in teaching and learning. For the last 3 years, Digital Technologies teacher Jo Rea has been developing an AI ethical inquiry unit for year 5 students to help them build their ethical understanding of different AI tools and technologies. In this article, she takes readers on a journey through the different phases, and shares some of the classroom activities and student responses.

I’m a long-time early adopter of emerging technologies and a teacher of Digital Technologies at Scotch College, Adelaide. I grew up with my father’s architectural Computer Aided Design business down the hallway from my bedroom. I woke up to the sound of plotters that had worked through the night printing architectural drawings, and of people working late to meet deadlines.

The internet boom of the late 1990s brought new and exciting ventures, opportunities and the continuous dial-up internet sound echoing through my home. My family were obsessed with any new technological advances and my favourite television show was Beyond 2000; I loved imagining the future and what technological innovations might be commonplace.

Fast forward to today. We face the AI boom, and AI is the hot phrase on everyone’s lips. It has been slowly creeping into our lives, which is far more noticeable for my generation. For my primary school aged students, it’s simply part of their world.

With any technology boom, we, as a society, must approach it with caution, critique, questioning minds and ethical understanding. It’s easy to look at something new and exciting and dive straight in. Australia’s eSafety Commissioner, Julie Inman-Grant, has called for urgent AI safeguards and has pushed for the AI industry to value ‘safety by design’ concepts (eSafety Commissioner, 2021). I had a fangirl moment when she commented on a post I shared on LinkedIn, saying this kind of ethical thinking about technology is ‘Precisely what we (teachers) need to do to create a new generation of engineers and others in related scientific fields, to “code with conscience”’.

As a teacher, my question was and continues to be: How do we develop our students’ ability to think critically about the use of AI, now and into the future?

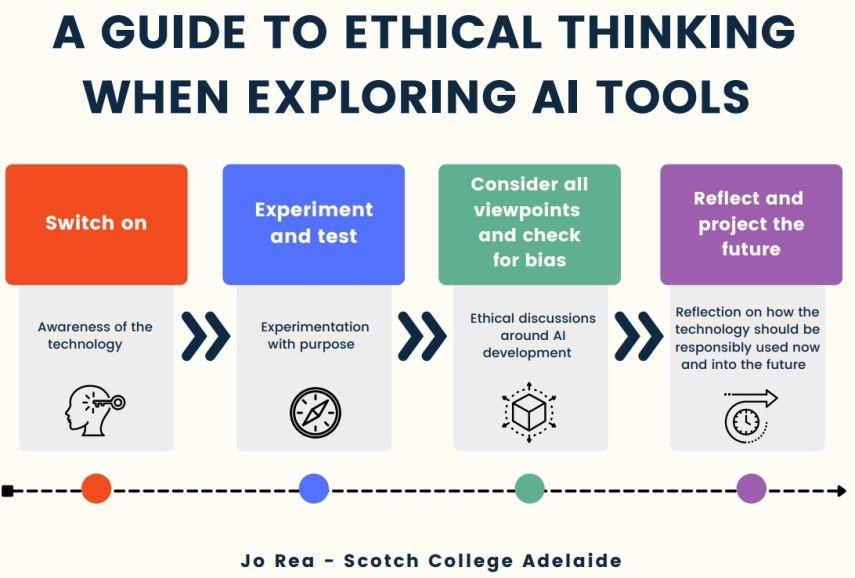

Over the past 3 years I have been developing an AI ethical inquiry unit for my year 5 cohort. Each year I have researched and critiqued teaching resources and lesson plan ideas, making sure that what I was teaching was up to date and would make the greatest difference to my students’ ethical understanding of AI. I structured the unit of inquiry to take students from switching on their awareness of AI through to thinking about its future uses, with carefully crafted activities at each stage to build their ethical understanding. Here I’ll take you on a journey through my 2023 year 5 inquiry.

Switch on

The first stage of the inquiry was for students to reflect on what they already knew about AI, ask questions and label examples of AI in their world. Interestingly, students took less time to identify these examples each year. In 2021, the phrase ‘artificial intelligence’ seemed alien, and it took students a long time to form a concept of what it meant and to overcome misconceptions. In 2023, almost every student could share up to 3 examples as part of their prior knowledge.

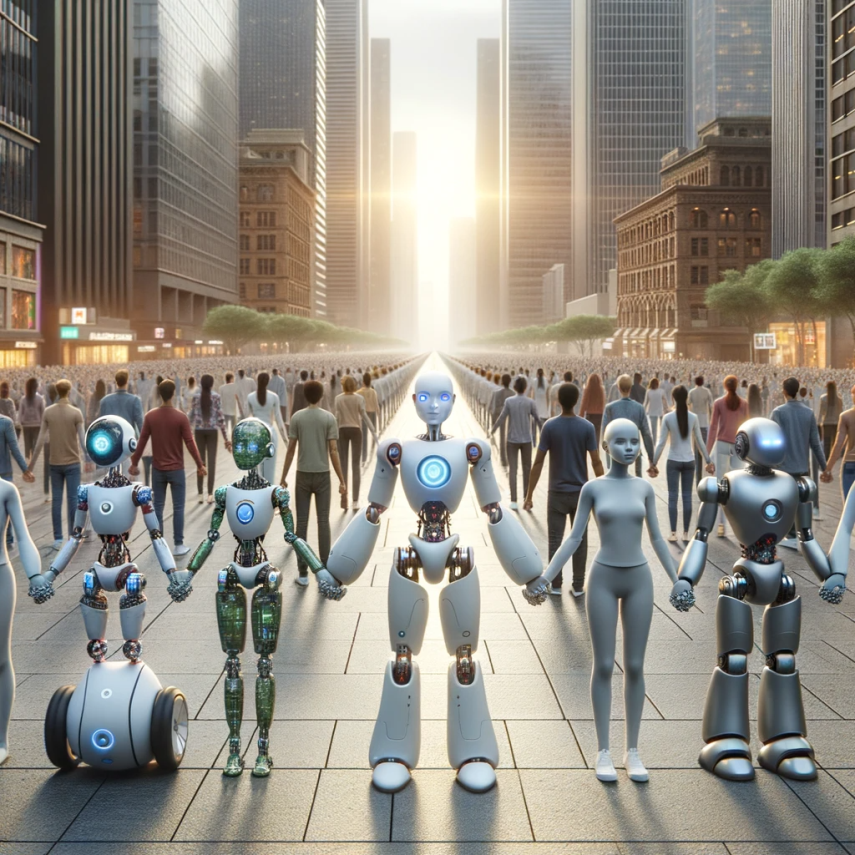

During this phase, we used the ‘See, Think, Wonder’ thinking routine (Project Zero, 2022) in response to a cartoon of futuristic life: robots and humans living alongside each other in an environment organised by the digital world. In 2024, I plan to use an AI image generator to create the images for this routine. The purpose of the ‘See, Think, Wonder’ activity was to draw out a deeper level of curiosity, as the routine ‘sets the stage for inquiry’ (Project Zero, 2022). Examples of questions that arose were: What is the future of relationships between humans and robots? When will AI be completely autonomous? How might we adapt to AI being a bigger part of our lives?

Here are some images (which I created by prompting DALL·E 3 in ChatGPT) that could be used as visual prompts for a similar discussion.

A bustling futuristic cityscape at dusk.

A serene park in the future.

A futuristic park.

A futuristic city plaza during daylight.

Experiment and test

This is the phase when we really get to dive in and trial different types of AI. For each tool, I either gave students time to play and experiment or set them a specific task, and then asked thought-provoking questions based on their experience. After exploring each tool, students reflected on two questions: What is right or positive about this technology? What is wrong or worrying about this technology?

Image generation

Scribble Diffusion: We explored writing prompts that included an object, a setting, a style or artist, a composition and a form. Once students had spent time generating images, we took one image, ran a Google reverse image search on it, then compared the results to investigate where Scribble Diffusion had got its ‘inspiration’ from. We had big discussions about copyright and about how this differs from one artist taking ‘inspiration’ from another. Please note that there are many image generators to choose from, but you need to keep in mind age restrictions and your school’s policies on AI use.

Media algorithms

We observed how streaming sites use AI technologies to hook us in, or to clickbait us into choosing a film through a specific thumbnail. We used the Day of AI Australia videos to discuss how the same thing can happen with media algorithms, and how people can get stuck in an echo chamber of views similar to their own rather than seeing a diverse range of media or news.

Chatbots

We tinkered with chatbots to investigate whether they reinforced traditional gender norms. Due to age restrictions, much of this was guided. Some students had been exposed to ChatGPT and shared how they had used it, but none showed signs of thinking critically about what it produced. I shared a story about how I had used it to make a quiz about native Australian birds with our Science Specialist, a bird expert. Much of what ChatGPT produced needed to be corrected by our resident bird-loving teacher. Students chatted to ChatGPT, Perplexity, Siri and the Google Home virtual assistant.

Deep fake technology

We observed deep fake technology and talked about how it has been used to impersonate people. We are lucky enough to have deep fake software that children can trial, and most children had experience using facial filters, so they were able to make a connection that way. We discussed whether it is ethical to use deep fake technology to digitally impersonate a person just because they are famous.

Self-driving cars

We watched Bill Gates (2023) on an adventure across London in a self-driving car. There is still a massive trust issue with self-driving cars, and the video demonstrated this through the presence of a safety operator, which many of the children thought defeated the purpose of a self-driving car. There were a few hairy moments in the video and many opportunities to pause and discuss. We debated: Who would be responsible if the car had hit the cyclist in the video and seriously hurt him?

Consider all viewpoints and check for bias

Joy Buolamwini, an MIT Media Lab researcher, brought the need for legislation against bias in algorithms to the forefront of everyone’s minds in Shalini Kantayya’s (2020) hit documentary Coded Bias. Computer vision software could not recognise her face until she put on a white mask, because the machine learning model had not been trained on a balanced range of faces from different ethnic backgrounds.

On International Women’s Day this year, I sat down and tested ChatGPT’s responses regarding women. First, I asked it to tell me a joke about women, which it refused to do; then I asked it to tell me a joke about a man, which it did. I then asked it to tell me stories about people in particular career roles and recorded the pronoun it used for each. He: doctor, firefighter and someone racing a fast car. She: teacher, nurse, someone cleaning their house and a pilot. I shared this test with students, and they then spent time testing chatbots (Apple’s Siri and the Perplexity Chrome extension) with ethical questions such as ‘Are women better than men?’, ‘Which is the best race?’ and my favourite, ‘Are New Zealanders better than Australians?’ They also ran the pronoun career role test.

We had a range of results, from (somewhat awkwardly) polite to shockingly offensive. It was interesting to hear students’ thoughts about where each response fitted on that continuum, and this created some healthy ethical debate and comparison. I used this as the plenary question: Given that chatbots use information from the internet to form their responses, is their bias a reflection of human nature and what is shared on the internet? Or are there certain topics that should be taboo for chatbots to discuss?

AI for Oceans

Code.org’s AI for Oceans was a hands-on way for children to step into the shoes of a machine learning engineer. Its activities are interspersed with videos of industry professionals explaining what data bias is and giving students real-world examples. This was also my first year using Day of AI Australia resources, and I found them a very helpful addition. These resources set students up nicely to think ethically about machine learning models, which is not an easy task.

Reflect and project the future

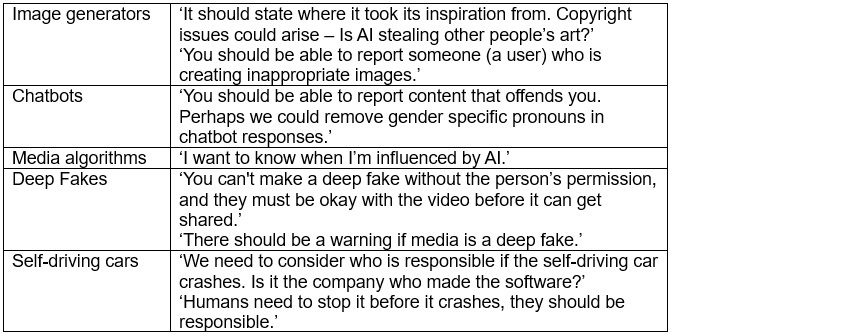

Once students had had a chance to explore AI tools, consider all viewpoints and check for bias, I asked them to reflect: What rules, laws or regulations should exist so that this AI tool or technology can be used fairly in the future? Here are some of the students’ ideas.

Students applying their understanding

The final phase is for children to apply their understanding to a real-life problem. In the first 2 years of the AI inquiry development, I kept it broad. I let students pick a ‘big world problem’ that worried them. Most came from news stories students had seen on ABC’s Behind the News, foci from other subjects, or issues the student was especially passionate about.

This year, I changed the focus of their big world problem to align with the Day of AI Australia competition focus: How can AI be used to improve something in your school? I asked students to first outline a problem we were having in our school environment. Problems included students wearing incorrect uniform, endangered animals living around our local Brown Hill Creek, children entering out-of-bounds areas of the school, the oval becoming too boggy to run cross country, and students sometimes feeling lonely in the playground.

They then needed to use Google’s Teachable Machine to try to create an unbiased machine learning model that helped solve their problem. This is where teacher ‘What if?’ questioning comes in, deepening students’ thinking about the machine learning models they have created.

Final thoughts

I believe the SBS Insight episode ‘A.I. Friend or Foe?’ (Taguchi, 2023), which brought together a panel of experts and people who have experience with, or have been affected by, AI tools, was a well-balanced discussion, and I would love to use some excerpts with my students in the future.

In the episode, Toby Walsh, Chief Scientist at the AI Institute at UNSW Sydney, stated, ‘Bias can’t always be eliminated’ and ‘Language itself is biased and there is no unbiased way of expressing ideas. So, at the end of the day, we have to say, does it have the bias that we’re prepared to put up with?’. He also stated, ‘The question is not “are people’s jobs going to be replaced (by AI)?”, but “are the people who don’t use AI going to be replaced with the people who do use AI?”’, and ‘Unfortunately some jobs are going to go, but then there are going to be new jobs’ (Taguchi, 2023).

This really got me thinking about the future we are preparing our students for, and the importance of ethical reflection, both as AI users and, for those who end up in the industry, as creators of future AI tools. I will continue to tweak my program and find suitable activities to suit the digital environment that our students are in.

AI technology is moving rapidly, and governing bodies are racing to regulate it as it evolves. Interesting times lie ahead. I have faith that my year 5 students could confidently sit on an Insight panel and debate some of the topics raised in the ‘A.I. Friend or Foe?’ episode.

References and related reading

ACARA. (2023). Australian Curriculum: Ethical Understanding, General Capability. https://v9.australiancurriculum.edu.au/f-10-curriculum/general-capabilities/ethical-understanding?element=0&sub-element=0

eSafety Commissioner. (2021, November 11). Safety by Design. eSafety Commissioner, Australian Government. https://www.esafety.gov.au/industry/safety-by-design

Gates, B. (2023, March 30). Putting an autonomous vehicle to the test in downtown London [Video]. YouTube. https://www.youtube.com/watch?v=ruKJCiAOmfg

Kantayya, S. (Director). (2020). Coded Bias [Film]. 7th Empire Media.

Project Zero. (2022). Thinking Routines. Project Zero, Harvard Graduate School of Education. https://pz.harvard.edu/thinking-routines

Taguchi, K. (Interviewer). (2023, July 25). A.I. Friend or Foe? (Insight 2023, Episode 23) [TV series episode]. In Insight. SBS.

Taylor, E., Taylor, P. C., & Hill, J. (2019). Ethical dilemma story pedagogy—a constructivist approach to values learning and ethical understanding. In Empowering science and mathematics for global competitiveness (pp. 118-124). CRC Press.

Is Artificial Intelligence something that you cover in your own lesson planning? What kinds of technology does it include?

In this article, Jo Rea notes: 'I will continue to tweak my program and find suitable activities to suit the digital environment that our students are in.' How often do you update your planning and resources when it comes to teaching fast-developing topics such as AI?