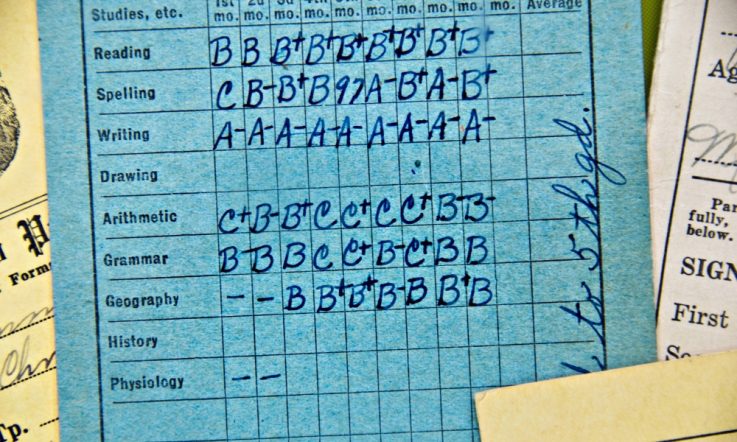

In the first article in this series on ACER's Communicating Student Learning Progress project (Hollingsworth & Heard, 2018), we reviewed some of the recent history of student reporting practices in Australia, including the discontent various stakeholders have expressed about the measures used in reporting, the level of detail provided, the accessibility of the language used, and the intelligibility of the information that reports have presented.

Here we examine more recent trends and current reporting practices in schools, specifically the growing use of electronic systems and tools. We also consider the opportunities these systems offer for reporting in the future, particularly in light of the recommendations on reporting both learning attainment and learning gain made in the recent Gonski report, Through Growth to Achievement: Report of the Review to Achieve Educational Excellence in Australian Schools (Australian Government Department of Education and Training, 2018).

The end of the semester report?

Over the last 10 years, schools have increasingly adopted sophisticated electronic management systems with multi-user functionality. Variously referred to as School Management Systems (SMS), Student or School Information Systems (SIS), Learning Management Systems (LMS) or Virtual Learning Environments (VLE), these systems share one feature: they give schools the capacity to report on student learning to both students and parents. Many of these products allow schools to generate semester reports automatically, simply by collating and aggregating the learning data and teacher feedback comments stored in a teacher's ‘mark book’.

According to several product providers, the vast majority of their client schools still produce semester reports, satisfying the mandated government requirement that all schools produce two summative written reports per year. However, all of these providers acknowledge that the semester report is changing quickly. In place of detailed comments and information about a student's performance, many schools are publishing more succinct, auto-generated academic transcripts, which are sometimes little more than graphs and grades.

James Leckie, co-founder and director of Schoolbox, observes that ‘there is certainly a trend towards schools removing the requirement for teachers to enter any additional information at the end of the semester’. Daniel Hill, director of sales at Edumate, says that the centrality of the traditional semester report as the cornerstone of communication with parents about their child's learning seems to be ‘a paradigm that is changing’. From his dealings with client schools, Hill suspects that many would drop semester reporting entirely were it not for the mandated requirements.

Continuous reporting

The waning effort put into producing semester reports in some schools reflects an increasing preference for a new reporting function that these electronic tools offer: continuous online reporting. Continuous reporting refers to the practice of reporting in regular instalments. Typically, at key moments throughout the semester, teachers enter updated assessment information into the online system, where it is made visible to students and parents.

The main benefit schools perceive in continuous reporting (sometimes referred to as progressive reporting) is that parents are informed of their child's achievement in a timely manner. The end of the semester is often seen as ‘too late’ for a parent to be formally notified of how their child has been performing. In addition, the ability to upload annotated copies of the student's work, include a copy of the assessment rubric and type unlimited feedback comments to the student (also visible to parents) is seen as far more informative than the restrictive summary comments usually offered in a semester report.

Although continuous reporting could be seen as an added burden for teachers, many schools, particularly secondary schools, instead see it as a trade-off in teacher time. Assessing several tasks and providing feedback to students throughout the semester is already established practice in secondary schools. Travis Gandy, general manager of operations at Compass, suggests that one of the more popular aspects of continuous reporting for teachers is the absence of an ‘end of semester rush to get the reports out’. If detailed feedback given to students mid-term can be made visible to parents at the click of a button, teachers no longer need to rehash that feedback for parents at the end of the semester, which can be seen as a win-win.

Progressive reporting versus reporting progress

Beyond the detail, frequency and timeliness of information that continuous online reporting allows, product providers go to significant lengths to add new reporting features and functions. In line with much of the academic research into high-impact teaching, these features support the creation of electronic rubrics, differentiated assessment tasks, narrative teacher feedback, digital annotation of student work, and student self-evaluation and reflection.

However, research suggesting that the most advanced learners in any given class can be as much as five or six years ahead of the least advanced has put mounting pressure on schools to assess – and communicate – not simply a child's performance against age-based curriculum standards, but the progress they make in their learning from their starting point. This was reflected in the recent Gonski report, which includes the following recommendation: ‘Introduce new reporting arrangements with a focus on both learning attainment and learning gain, to provide meaningful information to students and their parents and carers about individual achievement and learning growth.’ (Australian Government Department of Education and Training, 2018, p. 31).

Such a recommendation presents significant challenges for schools. In a forthcoming conference paper (Hollingsworth & Heard, in press), we identify two key findings from our early analysis of samples of student reports collected from schools and sectors around the country. One is that while schools often use the word ‘progress’ in reports, most of what they report focuses on performance, or attainment, rather than learning gain. As we outline in that paper, one possible explanation is that some schools erroneously treat a child's performance over time as synonymous with their progress over time.

Consultation with providers of LMS and SMS-like products appears to support this view. Demand still exists for electronic reporting features such as grade point averages, student-to-cohort comparison charts, and ‘learning alerts’ that track students' assessment performance longitudinally and notify teachers when a student obtains a score that falls significantly outside their usual performance. It is conceivable that schools use functions such as these to track or compare student performance, but interpret the results as indicating how a student is ‘progressing’.

By implication, it may even be that schools which simply report in continuous – or progressive – instalments mistake this for reporting ‘progress’. While providers of continuous reporting technologies are seeking solutions that would help schools represent learning gain as well as attainment in their reports, many schools are, according to Daniel Hill, ‘just at the beginning of that journey’.

James Leckie agrees. While he has worked with client schools to help them use electronic rubrics for assessment that could theoretically measure – and track – progress, he notes that ‘it does rely on schools implementing standards-aligned rubrics’ in the first place. Other providers, such as Sentral, enable schools to collate work sample portfolios for continuous assessment, which could potentially be used to demonstrate gains in student learning and skill development over time. Both Sentral and Compass provide continuum tracker applications; Travis Gandy at Compass explains that such a tracker ‘allows schools to tick off particular [achievement standard] outcomes’ over time. Gandy also points to Compass' data analytics tool, which stores external standardised assessment data (such as NAPLAN, PAT and OnDemand testing) that is useful for assessing progress along a learning or curriculum continuum. However, Gandy notes: ‘We often find it under-utilised by our schools’. Our own analysis of school reports in the Communicating Student Learning Progress project found only one school that reported standardised testing data.

Nevertheless, the functionality that electronic systems and tools already offer provides some exciting opportunities that – if re-purposed – may lead schools towards improvements in reporting along the lines of the Gonski report's recommendations. For example, teachers already have the capacity to report in regular instalments in place of (or perhaps in addition to) semester reports; to collate digital samples of student work in electronic portfolios as evidence of growth over time; to digitally annotate this work to describe the features that show increased proficiency; to track these gains on a curriculum continuum or digital rubric that reflects a progression of learning within a subject; and to correlate such evidence with stored data from external standardised testing. Schools are therefore already well equipped with electronic tools to communicate learning progress in the true sense: the growth in understanding, skills and knowledge a student makes over time, irrespective of their starting point.

What limits schools in this endeavour, therefore, is not the reporting technology but the curriculum design, delivery, and assessment practices inherited from an industrial model of schooling, with its narrow focus on students' performance and achievement against year-level expectations rather than the gains that learners make over time. A broader focus, coupled with a reimagining of how existing technologies could be used, provides a clear first step towards the improvements in reporting that would enable us to better value and communicate the growth our students make.

Dr Hilary Hollingsworth and Jonathan Heard will be presenting at ACER's Research Conference 2018. Their session is titled ‘Communicating student learning progress: What does that mean, and can it make a difference?’

References

Australian Government Department of Education and Training. (2018). Through Growth to Achievement: Report of the Review to Achieve Educational Excellence in Australian Schools. Canberra: Commonwealth of Australia. Retrieved May 2018, from https://docs.education.gov.au/node/50516

Hollingsworth, H., & Heard, J. (2018). Does the old school report have a future? Teacher Magazine. Retrieved from https://www.teachermagazine.com/articles/does-the-old-school-report-have-a-future

Hollingsworth, H., & Heard, J. (in press). Communicating Student Learning Progress: What does that mean, and can it make a difference? Paper to be presented at ACER's Research Conference 2018, Sydney, August 2018.

Think about the way your school delivers reports to parents and carers. Does technology affect the way you communicate results to them? Has technology changed the nature of reporting?

How well does student reporting in your school – in whatever form it takes – really communicate student learning progress?