Thank you for downloading this episode of The Research Files podcast series, brought to you by Teacher magazine – I'm Rebecca Vukovic.

Many people believe that because young people are digital natives, they are also digital-savvy. But a new report from researchers at Stanford University's Graduate School of Education has found that young people do experience difficulties when it comes to evaluating information they find online. In fact, this research, which tested middle school, high school and college level students, found that 80 per cent of participants thought that sponsored articles were actual articles, and had a hard time distinguishing where this information actually came from. One of the co-authors of the report, Sarah McGrew from the Stanford History Education Group, joined me on the line from California to discuss her team's findings.

Rebecca Vukovic: Sarah McGrew, thank you for joining Teacher magazine.

Sarah McGrew: Thanks for having me.

RV: We're here to talk today about the Stanford University study titled Evaluating Information: The cornerstone of civic online reasoning. There was a really interesting line from that report, and I'll read it straight from the executive summary: ‘Our “digital natives” may be able to flit between Facebook and Twitter while simultaneously uploading a selfie to Instagram and texting a friend. But when it comes to evaluating information that flows through social media channels, they are easily duped.’ So Sarah, I was wondering, why do you think it is that young people are so easily fooled by fake news?

SM: I certainly think they are, I also think although our study covered young people and I think we often assume, like we argued, that young people are just as fluent and proficient at evaluating information as they are at surfing the internet. But this would probably apply to adults too and hopefully someone will study adults and see, I think probably, some similar shortcomings, although I think often we assume that adults aren't as fluent online because they haven't grown up with technology in the way that our young people have. But I really think that technology is outpacing us – that we've had these traditional means of evaluating information, that we've relied on information gatekeepers like newspaper editors and publishers and we've had some sort of traditional markers of authority that we've relied on like reference lists or professional appearance, things that have served us well in eras before the internet. But technology is so quickly outpacing us and changing and we just haven't kept up with it. And I think particularly when we're talking about contentious social and political issues that young people and adults have really strong opinions about, it's easy to be fooled if you see something that aligns with what you already believe or what you want to believe. It's easy, especially if you haven't been taught, to trust something before evaluating it thoroughly.

RV: And as you've said in the report, the times have changed and you mentioned just earlier that ordinary people once relied on publishers and editors and experts to vet the information they consumed. But, nowadays, we access information from countless different sources. So, I was hoping you could explain to me why it's important that we are actually taught to evaluate information to distinguish what's reliable and what's not.

SM: I think it's monumentally important because obviously people are relying more and more, especially young people, on the internet as their primary source of information about the world and the evidence we have so far shows that they're not very good at it – at deciding what's true and what's not. And that has an impact on the personal decisions you make, ones that are pretty low stakes, but also has high personal costs if you make decisions based on bad information and high public cost. We are particularly concerned about the fact we live in a democracy where the level of information of our fellow citizens has impact on the choices they make and the actions they take. And if we're not basing our actions and our decisions on good information, I think that's a real potential threat to democracy.

RV: That's really interesting. Just to go back a bit, I was hoping you could briefly talk me through how you actually went about completing this research – so the steps you took along the way.

SM: We followed a pretty careful and exhaustive process of assessment design where we first described and laid out the domain that we're interested in assessing, and then draw up prototypes of the assessments to very quickly get them up to piloting. We know that we as adults may not be great at designing tasks that are really clear for students and assess what we want to assess, so we'd start with small groups of students piloting our tasks and then based on their responses we revise the tasks and re-pilot until we're happy with the tasks. And then we do think-aloud interviews with students so we sit down with the tasks and the student is asked to complete it and then talk us through their thinking, again to make sure that the task is tapping what we intended it to tap. And then once we were pretty happy with the task, we send it out to a large number of students, again to ensure the task is working like we think it should and to start to get a sense of what students can do with the task and that is what our report is based on – those large numbers of students taking the task once they were complete.

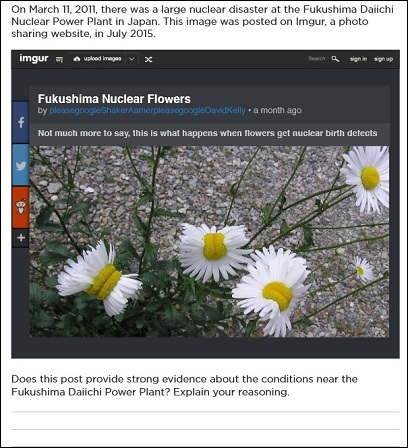

[In this test item, students were required to ask a basic question: Where did this document I'm looking at come from? Image supplied.]

RV: And one thing Sarah I got from reading the report and I guess reading the response to the report as well, is that people really aren't aware of this as a problem. I actually read in the report as well that you and your co-authors had little sense of the depth of the problem when you first began this study. So I was hoping you could talk me through some of the next steps you've taken or will be taking to bring awareness to this problem?

SM: Yeah well I think we've been overwhelmed and happy about the response to the report, that people are really taking it up and starting to realise, along with us, what a big problem this is and hopefully how much of a response it requires. So we're working on a couple of things. One is we're building more assessments. We have a set of 15 that we will hopefully be releasing by the end of this school year [in the US], along with accompanying student responses and rubrics, but we're also I think even more critically starting to think about curriculum – so thinking about how to support teachers in using these tasks in their classroom but also to go about teaching students to do a better job of evaluating information online. We're sort of just in the beginning stages of that work. We're trying to think carefully through what kinds of subject areas it fits best within and what really powerful, high quality lesson plans would look like to help students to do a better job learning to evaluate information.

RV: Fantastic! Finally then Sarah, what can individual teachers do to help their students to become more digitally literate?

SM: I think the first thing I would say is don't make assumptions about what students do and don't know or can and can't do online. The premise of our report, I think it's easy to assume that young people are really fluent online and maybe even more fluent than we are which is scary to take on as a teacher. But I think this report shows that's not necessarily true and that teachers should have some sort of way of checking where their students are before they take on teaching this.

And then I would say focus on just a few of the most important questions or ways of thinking that you want students to acquire. So, at least in the US, a common way of teaching website evaluation is these long checklists with 20-30 ‘yes’ or ‘no’ questions that students answer as a means to evaluating. … I think it's problematic to ask students to go through such an exhaustive evaluation process that they won't necessarily replicate on their own. So, we're just trying to think about just a few things – what are the key questions that you'd want students to ask, just if they're going to take a few extra seconds to evaluate something before they retweet it or post it on Facebook. What would you want them to ask?

One of the questions we think is really important is ‘who is behind this and what's their motivation?’ So, helping students learn how important that question is to ask but then also how you go about answering it online, because the internet does provide lots of resources to help us figure out who is behind websites and information that's posted. So, that's just one example and like I said we're still very much in development mode. But, I think there are certainly things teachers could do now to help students both acquire ways of thinking and dispositions to think carefully about information but also the specific skills they need to assess it.

RV: There's some really practical advice there. Well Sarah McGrew, thanks for sharing your research with Teacher magazine.

You've been listening to an episode of The Research Files, from Teacher magazine. To download all of our podcasts for free, visit acer.ac/teacheritunes or www.soundcloud.com/teacher-acer. To find out more about the research discussed in this podcast, and to access the latest articles, videos and infographics visit www.teachermagazine.com.au

References

Wineburg, S. & McGrew, S. (2016). Evaluating information: The cornerstone of civic online reasoning. Stanford History Education Group. Retrieved from http://sheg.stanford.edu/upload/V3LessonPlans/Executive%20Summary%2011.21.16.pdf

In what ways do you teach your students to evaluate information online? Do your students recognise the difference between sponsored content and genuine editorial?

Sarah McGrew says ‘one of the questions we think is really important is who is behind this and what’s their motivation?’ In what ways could you use this question to guide your students when they read content online?