Developing literacy skills in a digital world

In 2000, the first assessment cycle of PISA (Programme for International Student Assessment) focused on reading literacy, and in 2018 PISA assessed reading literacy as a major domain for the third time. The PISA definition of reading literacy, developed in 2000 and still used in 2018, is ‘… understanding, using, reflecting on and engaging with texts in order to achieve one’s goals, to develop one’s knowledge and to participate in society’.

So much has changed in the way we view literacy over this time. Much of the reading that our teenagers do now is digital rather than in print, and the COVID-19 pandemic has accelerated the use of digital technologies for education. Students’ time on the internet – both in and out of school – continues to grow, from an average across Australia of 28 hours per week in 2012 to 40 hours per week in 2018 (OECD, 2021, p. 21), more than the average adult working week.

The OECD argues that ‘literacy in the 20th century was about extracting and processing pre-coded and – for school students – usually carefully curated information; in the 21st century, it is about constructing and validating knowledge’ (OECD, 2021, p. 3). Students no longer go to an authoritative print source to obtain information – they search on the internet, where anyone can publish any content they wish, without any form of verification. What does this mean for the assessment of reading literacy in this new world of information?

The change over the last 20 years in what and how students read has emphasised the importance of assessing not only students’ capacity to read, but also what they have learned about the credibility of what they read. For example, how well are they able to distinguish fact from opinion, or to detect biased information or malicious content such as phishing or fake news? These skills are essential in a world flooded by information from a variety of sources.

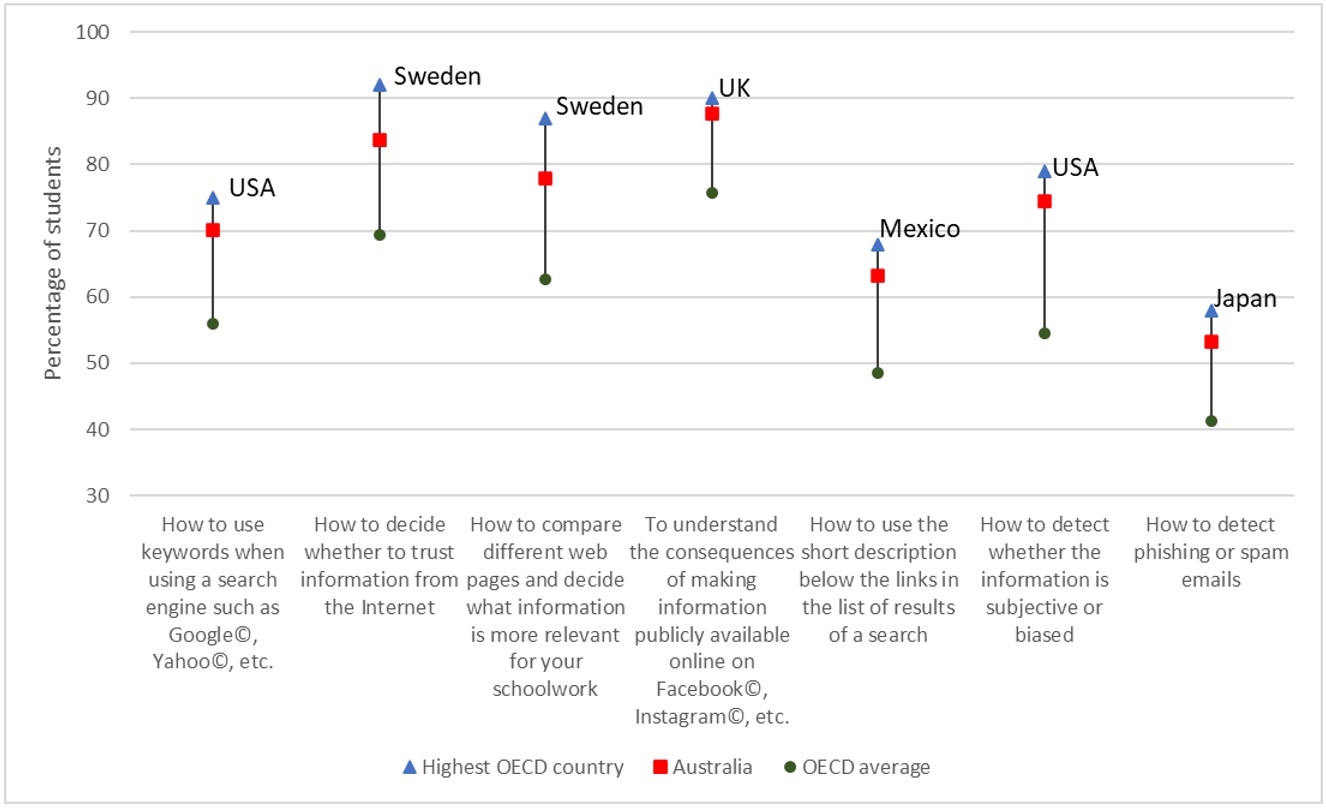

PISA 2018 asked students whether during their entire school experience they were taught:

- how to use keywords when using a search engine;

- how to decide whether to trust information from the internet;

- how to compare different web pages and decide what information is more relevant for your schoolwork;

- to understand the consequences of making information publicly available online on social media;

- how to use the short description below the links in the list of results of a search;

- how to detect whether information is subjective or biased; and,

- how to detect phishing or spam emails.

Australian students reported having learned all of these skills in school to a significantly greater extent than on average across the OECD countries.

In Australia, as in many countries and across the OECD on average, the most frequently taught skill was probably the one most relevant to students in this age group – understanding the consequences of making information publicly available online on various social media platforms (UK 90%, Australia 88%, OECD average 76%). Also important in most countries, including Australia, were how to decide whether to trust information from the internet (Sweden 92%, Australia 84%, OECD average 69%), and how to compare different web pages and decide what information is more relevant for your schoolwork (Sweden 87%, Australia 78%, OECD average 63%) (Figure 1).

Figure 1. Students’ reports that they were taught these skills during their schooling

While the percentage of students who responded that they had been taught how to detect whether information is subjective or biased is relatively high (USA 79%, Australia 75%, OECD average 54%), it is not known how well they actually do this.

A skill that appears not to have been taught to the same extent – internationally as well as in Australia – is how to detect phishing or spam emails (Japan 58%, Australia 53%, OECD average 41%), which may be of concern in times of increasing use of such techniques for installing malware, particularly ransomware, onto remote host computers.

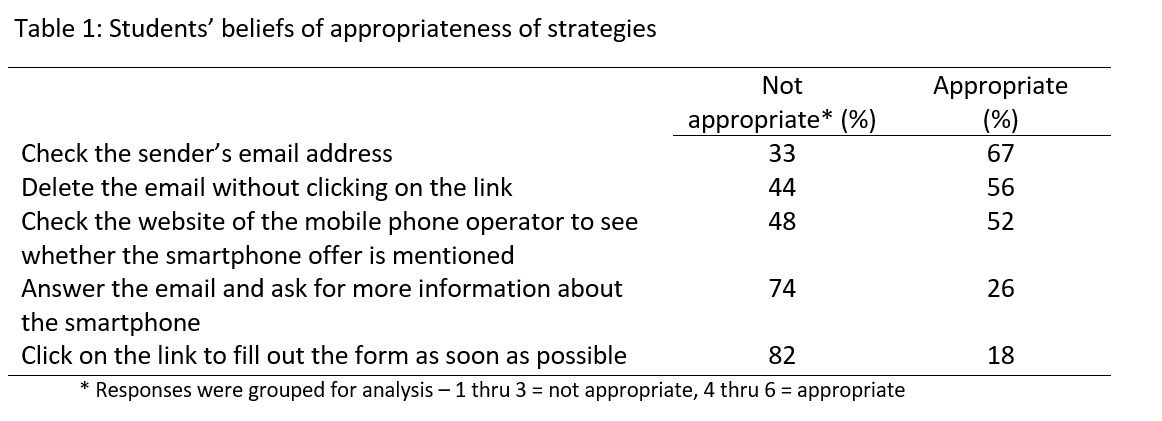

PISA 2018 also included several scenario-based tasks where students were asked to rate how useful different strategies were in a particular reading situation. In one of these scenarios, students received an email from a well-known mobile operator asking them to click on a link and fill out a form with their data to win a smartphone. The purpose of this task was to assess students’ knowledge of strategies for assessing credibility.

Students were asked how appropriate they believed that the following strategies were in reaction to this email, on a six-point scale ranging from Not appropriate at all (1) through to Very appropriate (6). Table 1 shows the responses from Australian students.

The strategies were also rated by reading experts via multiple pairwise comparisons. This rating resulted in a hierarchy of all strategies for each task, based on all the pairs agreed upon by at least 80 per cent of the experts. For this scenario, the experts’ ratings resulted in the order in which the strategies are listed in Table 1. The final score assigned to each student for each task ranges from 0 to 1 and can be interpreted as the proportion of the total number of expert pairwise relations that are consistent with the student’s ordering. The higher the score, the more often a student chose an expert-validated strategy over a less useful one. Finally, the index was standardised to have an OECD mean of 0 and a standard deviation of 1. The mean index score for Australia was 0.1, which was significantly higher than the OECD average. The United Kingdom had the highest score of 0.3.
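
The scoring described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the OECD's scoring code: the strategy labels, the expert pairs and the student ratings are all invented for the example, and treating a tie as "not consistent" is an assumption of the sketch. The names `EXPERT_PAIRS`, `consistency_score` and `standardise` are hypothetical.

```python
from statistics import mean, pstdev

# Hypothetical expert hierarchy: each tuple is (more appropriate, less appropriate).
# Only pairs agreed on by at least 80 per cent of experts would be listed here.
EXPERT_PAIRS = [
    ("check_sender", "click_link"),
    ("delete_email", "click_link"),
    ("check_website", "click_link"),
    ("check_sender", "reply_for_info"),
]


def consistency_score(ratings, expert_pairs=EXPERT_PAIRS):
    """Return the share of expert pairs that the student's ratings reproduce.

    `ratings` maps each strategy to a rating from 1 (not appropriate at all)
    to 6 (very appropriate). A tie counts as not consistent, which is an
    assumption of this sketch.
    """
    consistent = sum(
        1 for better, worse in expert_pairs if ratings[better] > ratings[worse]
    )
    return consistent / len(expert_pairs)


def standardise(scores):
    """Rescale scores to mean 0 and standard deviation 1 within this group."""
    mu = mean(scores)
    sigma = pstdev(scores)
    return [(score - mu) / sigma for score in scores]


# Made-up ratings for three students.
students = [
    {"check_sender": 6, "delete_email": 5, "check_website": 6,
     "click_link": 1, "reply_for_info": 2},
    {"check_sender": 5, "delete_email": 2, "check_website": 6,
     "click_link": 3, "reply_for_info": 5},
    {"check_sender": 2, "delete_email": 1, "check_website": 2,
     "click_link": 6, "reply_for_info": 4},
]

raw_scores = [consistency_score(r) for r in students]
index = standardise(raw_scores)
print(raw_scores)  # [1.0, 0.5, 0.0]
```

The first student agrees with every expert pair and scores 1, the third agrees with none and scores 0. In this sketch the standardisation is done within the three-student toy group; the published index is standardised so that the OECD mean is 0 and the standard deviation is 1, so a country mean such as Australia's 0.1 is read relative to that OECD reference point.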

The global pandemic has shown how much misinformation can be generated and spread widely via the internet. It is therefore essential that young people develop the level of reading literacy skills necessary to triangulate information and detect bias. In addition, phishing emails and their offshoot, ransomware, necessitate a range of digital literacy skills.

References

OECD (2021), 21st-Century Readers: Developing Literacy Skills in a Digital World, PISA, OECD Publishing. https://doi.org/10.1787/a83d84cb-en